Kettle (PDI) and Project Hop

VISUALLY DESIGN POWERFUL DATA PIPELINES

Update: To continue serving our customers as well as we can, we are heavily involved in Apache Hop, which started from the Kettle code base but focuses on innovative data engineering. Read more about how we helped launch Project Hop, which later became Apache Hop and is now a Top-Level Project at the Apache Software Foundation. Discover how your PDI/Kettle projects can live on in Apache Hop.

Data Engineering

Data comes in a wide variety of formats, from different sources and often at different quality levels. Getting reliable results from data requires a lot of preparatory work.

Regardless of a project's goal or the desired solution (data warehousing, big data analytics, machine learning), a commonly cited estimate is that 80% of the time is spent on data preparation. Data engineering is a broad term that covers areas like data acquisition, linking data sets, data cleaning and the actual loading of data into the desired format or target platform.

Visual Development

To be efficient at data preparation, having the right tools for the task is crucial. Kettle (also known as Pentaho Data Integration or PDI) is an open source data integration platform with over 15 years of history.

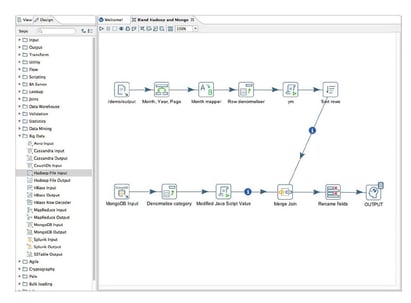

Kettle lets you visually develop data streams or pipelines. After the initial development, Kettle code is managed like any other software, including version control, testing, CI/CD, etc.

Additionally, visual development allows developers, data engineers and data scientists to focus on what needs to be done, not on how to prepare the data.

Kettle

Kettle supports a large number of data formats, can connect to virtually every major data platform on the market, and has extensive options to build scalable and extendable solutions.

- access to databases, file formats (CSV, JSON, YAML, Office, ...), integration with Kafka, AWS Kinesis, Google Pub/Sub, various IoT protocols, orchestration of data science and machine learning algorithms and more.

- integration and combination of data through aggregations, lookups, joins, ...

- execution: data pipelines can run not only in the native Kettle engine but also, through the Apache Beam integration, on Apache Spark, Apache Flink, etc.

- scalable: through a highly configurable engine, Kettle can process anything from small to huge amounts of data, in batch, streaming and/or real-time mode.

- extendable: Kettle's standard functionality can be extended even further by adding plugins from a large catalog of available plugins, or by writing your own.

know.bi has been involved in the development of Kettle from a very early stage. We know the platform inside out and can help you maximize your return on investment. Apart from standard services, we can help tailor the Kettle platform to your needs.